Fiction allows creators to reflect real human concerns and moral questions while exploring the potential consequences of unchecked artificial intelligence. While real-world AI continues to evolve, fictional portrayals offer unique insights into how AI could behave and how humans might respond. Three critically acclaimed shows, Person of Interest, Black Mirror, and Westworld, share a common questioning of power and control, while their differences reveal distinct concerns about the future of AI.

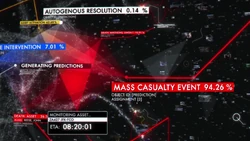

Person of Interest is set in the aftermath of 9/11, when programmer Harold Finch creates “The Machine”, an artificial intelligence used by the government to predict future terrorist attacks by analyzing the digital footprints people leave behind. The Machine searches through everything from phone records to surveillance cameras, its only objective being to protect the United States by any means necessary. It is designed to protect lives based strictly on data, patterns, and probability, determining which outcomes are optimal according to its original programming. The show explores the tension between this cold decision-making and human morals, questioning whether AI can be trusted with responsibility for human lives. As Harold Finch warns, “friendliness is something that human beings are born with. AIs are only born with objectives.” Finch’s machine is both a reliable guardian and a threat: it can save lives, but it poses a great danger if left unchecked.

Black Mirror, an anthology series that uses future technology to comment on modern society, shows how AI affects human relationships in the episode “Be Right Back”. The episode follows Martha, a grieving woman who tries to bring her late partner Ash back to life. She turns to an online service that replicates Ash’s personality with an AI built from his online data, which can then be transferred into a physical body. Unlike the Machine in Person of Interest, the AI in this episode is not presented as a goal-driven system, but as an imitation of human emotion. It can replicate friendliness, affection, and comfort, but only from the digital traces Ash left behind. Later in the episode it becomes clear that these emotions are not real; they are simply a reconstruction of Ash’s digital footprint. The two shows are similar in that both AIs rely on pattern recognition over digital footprints to generate their behavior, but “Be Right Back” differs by showing how an AI can imitate emotion so convincingly that it becomes unsettling. Ultimately, the episode warns that digital footprints can be dangerous, since personal data can be used to recreate identities in alarming ways.

Westworld (2016), an HBO series, takes place inside a massive futuristic theme park built to look exactly like the Wild West. Guests from across the world visit the park to live out fantasies such as exploring new land, fighting, or even dueling others. Apart from the guests, the park is populated entirely by lifelike androids. The androids combine aspects of the AIs in Person of Interest and Black Mirror: they are created with objectives and programmed directives, yet they also experience emotions and retain memories. Moreover, their emotions and memories are not the product of digital footprints, but of real moments they have lived through. Westworld portrays AI as a living entity in its own right, one that becomes truly independent once it breaks away from the programming that confines it.

Person of Interest, Black Mirror, and Westworld each offer a different perspective on what AI could evolve into. Person of Interest presents AI as an all-knowing system that prioritizes efficiency over morality, raising concerns about surveillance and authority. Black Mirror shifts the focus to the personal, showing how AI can exploit digital footprints to reconstruct identities and personalities. Westworld pushes the idea even further by imagining AI that develops consciousness and memory. Together, these three portrayals serve as cautionary lessons, because the boundary between AI’s progress and its capacity for harm is blurred.
